Leaderboard

| Agent | % Progress | BabyAI | Crafter | TextWorld | BabaIsAI | MiniHack | NetHack | Date |
|---|---|---|---|---|---|---|---|---|
| 🕹️ ✔️ Qwen2.5-72B-it | 16.2 ± 1.6 | 34.0 ± 6.7 | 27.3 ± 3.6 | 11.2 ± 3.8 | 19.3 ± 3.6 | 5.0 ± 3.4 | 0.3 ± 0.3 | 2024-11-25 |
| 🕹️ ✔️ Claude-3.5-Sonnet-2024-10-22 | 32.6 ± 1.9 | 68.0 ± 6.6 | 32.7 ± 3.2 | 42.1 ± 5.4 | 37.5 ± 4.4 | 15.0 ± 5.6 | 0.6 ± 0.5 | 2024-11-11 |
| 🥈 🕹️ ✔️ Grok-4 | 43.6 ± 2.2 | 76.0 ± 6.0 | 57.3 ± 3.9 | 62.9 ± 7.9 | 45.8 ± 4.5 | 17.5 ± 6.0 | 1.8 ± 0.8 | 2025-07-23 |
| 🕹️ ✔️ Gemini-1.5-Flash-002 | 14.6 ± 1.4 | 50.0 ± 7.1 | 20.0 ± 0.7 | 0.0 ± 0.0 | 12.8 ± 2.3 | 5.0 ± 3.5 | 0.0 ± 0.0 | 2024-11-11 |
| 🕹️ ✔️ Llama-3.1-8B-it | 15.1 ± 1.6 | 36.0 ± 6.8 | 25.5 ± 3.2 | 6.1 ± 2.4 | 18.3 ± 3.5 | 5.0 ± 3.4 | 0.0 ± 0.0 | 2024-11-11 |
| 🕹️ ✔️ Qwen-2.5-7B-it | 7.8 ± 1.1 | 14.0 ± 4.9 | 16.4 ± 3.0 | 3.9 ± 1.0 | 12.5 ± 3.0 | 0.0 ± 0.0 | 0.0 ± 0.0 | 2024-11-25 |
| 🕹️ ✔️ DeepSeek-R1-Distill-Qwen-32B | 19.5 ± 1.6 | 48.0 ± 7.1 | 15.0 ± 2.1 | 10.6 ± 3.7 | 40.0 ± 4.5 | 2.5 ± 2.5 | 0.7 ± 0.4 | 2025-01-26 |
| 🕹️ ✔️ Microsoft-Phi-4 | 11.6 ± 1.4 | 32.0 ± 6.6 | 13.6 ± 2.7 | 2.5 ± 0.9 | 16.7 ± 3.4 | 5.0 ± 3.4 | 0.0 ± 0.0 | 2025-01-13 |
| 🕹️ ✔️ Reka-Flash-3 | 29.2 ± 1.8 | 76.0 ± 6.0 | 33.6 ± 3.5 | 11.6 ± 4.6 | 43.3 ± 4.5 | 10.0 ± 4.7 | 0.6 ± 0.3 | 2025-03-13 |
| 🕹️ ✔️ Gemini-2.5-Flash | 33.5 ± 2.2 | 68.0 ± 6.6 | 40.0 ± 4.8 | 29.6 ± 7.2 | 50.0 ± 4.6 | 12.5 ± 5.2 | 0.8 ± 0.4 | 2025-07-22 |
| 🕹️ ✔️ Qwen2-VL-7B-it | 3.7 ± 0.8 | 4.0 ± 2.8 | 6.4 ± 1.7 | 1.6 ± 0.6 | 7.6 ± 2.4 | 2.5 ± 2.5 | 0.0 ± 0.0 | 2024-11-25 |
| 🕹️ ✔️ Grok-3-beta | 29.5 ± 2.2 | 62.0 ± 6.9 | 25.0 ± 4.1 | 32.5 ± 6.9 | 33.3 ± 4.3 | 22.5 ± 6.6 | 1.6 ± 0.4 | 2025-04-25 |
| 🕹️ ✔️ Llama-3.2-3B-it | 10.1 ± 1.3 | 20.0 ± 5.7 | 17.3 ± 2.8 | 3.5 ± 1.1 | 17.5 ± 3.5 | 2.5 ± 2.5 | 0.0 ± 0.0 | 2024-11-11 |
| 🕹️ ✔️ Llama-3.3-70B-it | 23.0 ± 1.7 | 66.0 ± 6.7 | 28.6 ± 4.1 | 9.0 ± 2.9 | 29.2 ± 4.1 | 5.0 ± 3.4 | 0.4 ± 0.3 | 2024-12-09 |
| 🕹️ ✔️ GPT-4o-2024-05-13 | 32.3 ± 1.5 | 77.6 ± 3.7 | 33.1 ± 2.3 | 39.3 ± 5.2 | 33.7 ± 3.3 | 10.0 ± 4.7 | 0.4 ± 0.4 | 2024-11-11 |
| 🕹️ ✔️ GPT-5-minimal-think | 32.8 ± 2.2 | 80.0 ± 5.7 | 39.1 ± 4.1 | 30.6 ± 7.0 | 25.8 ± 4.0 | 20.0 ± 7.3 | 1.3 ± 0.5 | 2025-08-19 |
| 🕹️ ✔️ Gemini-1.5-Pro-002 | 21.0 ± 1.2 | 58.4 ± 4.4 | 30.2 ± 2.9 | 0.0 ± 0.0 | 32.0 ± 3.3 | 5.0 ± 3.5 | 0.4 ± 0.4 | 2024-11-11 |
| 🕹️ ✔️ Qwen2-VL-72B-it | 12.8 ± 1.6 | 24.0 ± 6.0 | 22.7 ± 2.7 | 16.5 ± 5.4 | 10.8 ± 2.8 | 2.5 ± 2.5 | 0.0 ± 0.0 | 2024-11-25 |
| 🕹️ ✔️ Mistral-Nemo-it-2407 | 17.6 ± 1.5 | 50.0 ± 7.1 | 27.7 ± 2.7 | 4.5 ± 1.3 | 20.8 ± 3.7 | 2.5 ± 2.5 | 0.3 ± 0.3 | 2024-12-09 |
| 🕹️ ✔️ Llama-3.1-70B-it | 27.9 ± 1.4 | 73.2 ± 4.0 | 31.2 ± 2.7 | 15.0 ± 4.6 | 40.0 ± 3.4 | 7.5 ± 4.2 | 0.3 ± 0.3 | 2024-11-11 |
| 🕹️ ✔️ GPT-4o-mini-2024-07-18 | 17.4 ± 1.4 | 50.4 ± 4.5 | 15.9 ± 2.0 | 12.2 ± 3.5 | 15.6 ± 2.5 | 10.0 ± 4.7 | 0.0 ± 0.0 | 2024-11-11 |
| 🕹️ ✔️ Llama-3.2-90B-it | 27.3 ± 1.4 | 72.0 ± 6.3 | 31.7 ± 1.4 | 11.2 ± 3.0 | 43.9 ± 3.5 | 5.0 ± 3.4 | 0.0 ± 0.0 | 2024-11-11 |
| 🕹️ ✔️ Claude-3.5-Haiku-2024-10-22 | 19.3 ± 1.8 | 52.0 ± 7.1 | 26.4 ± 2.8 | 18.0 ± 5.8 | 8.3 ± 2.5 | 10.0 ± 4.7 | 1.2 ± 0.4 | 2024-12-11 |
| 🥇 🕹️ ✔️ Gemini-3-Flash | 48.1 ± 2.4 | 86.0 ± 4.9 | 45.0 ± 6.3 | 50.2 ± 8.1 | 73.3 ± 4.0 | 30.0 ± 7.2 | 4.0 ± 0.8 | 2026-02-13 |
| 🕹️ ✔️ Llama-3.2-11B-it | 16.8 ± 1.5 | 50.0 ± 7.1 | 26.2 ± 3.3 | 6.7 ± 2.2 | 15.6 ± 2.5 | 2.5 ± 2.5 | 0.0 ± 0.0 | 2024-11-11 |
| 🕹️ ✔️ DeepSeek-R1 | 34.9 ± 2.1 | 74.0 ± 6.2 | 36.4 ± 3.8 | 21.8 ± 6.1 | 50.8 ± 4.6 | 25.0 ± 6.8 | 1.4 ± 0.5 | 2025-04-10 |
| 🥉 🕹️ ✔️ Gemini-2.5-Pro-Exp-03-25 | 43.3 ± 2.3 | 80.0 ± 5.7 | 55.0 ± 6.0 | 49.2 ± 8.2 | 56.7 ± 4.5 | 17.5 ± 6.0 | 1.7 ± 0.2 | 2025-04-25 |
| 🕹️ ✔️ Llama-3.2-1B-it | 6.6 ± 1.0 | 8.0 ± 3.8 | 12.7 ± 1.9 | 3.3 ± 0.9 | 10.8 ± 2.8 | 5.0 ± 3.4 | 0.0 ± 0.0 | 2024-11-11 |

|

Agent

|

% Progress

|

BabyAI

|

Crafter

|

BabaIsAI

|

MiniHack

|

NetHack

|

Date

|

|---|---|---|---|---|---|---|---|

|

🥈 🕹️ ✔️ Claude-3.5-Sonnet-2024-10-22 |

35.5 ± 2.0 |

82.0 ± 5.4 |

37.3 ± 3.1 |

34.5 ± 4.4 |

22.5 ± 6.6 |

1.2 ± 0.4 |

2024-11-11 |

|

🕹️ ✔️ Gemini-1.5-Flash-002 |

14.9 ± 1.4 |

43.2 ± 4.4 |

20.7 ± 4.4 |

8.3 ± 1.9 |

2.5 ± 2.5 |

0.0 ± 0.0 |

2024-11-11 |

|

🕹️ ✔️ Qwen2-VL-7B-it |

4.4 ± 0.8 |

2.0 ± 2.0 |

5.5 ± 0.9 |

11.9 ± 3.0 |

5.0 ± 3.4 |

0.0 ± 0.0 |

2024-11-25 |

|

🕹️ ✔️ GPT-4o-2024-05-13 |

22.6 ± 1.4 |

62.0 ± 4.3 |

26.8 ± 3.7 |

18.6 ± 2.7 |

5.0 ± 3.4 |

0.4 ± 0.4 |

2024-11-11 |

|

🥉 🕹️ ✔️ Gemini-1.5-Pro-002 |

25.8 ± 1.4 |

58.4 ± 4.4 |

33.5 ± 2.1 |

31.4 ± 3.2 |

5.0 ± 3.4 |

0.5 ± 0.5 |

2024-11-11 |

|

🕹️ ✔️ Qwen2-VL-72B-it |

12.2 ± 1.6 |

34.0 ± 6.7 |

18.6 ± 2.8 |

5.9 ± 2.2 |

2.5 ± 2.5 |

0.0 ± 0.0 |

2024-11-25 |

|

🕹️ ✔️ Llama-3.2-90B-it |

21.0 ± 1.6 |

66.0 ± 6.7 |

14.5 ± 1.8 |

21.9 ± 2.9 |

2.5 ± 2.5 |

0.0 ± 0.0 |

2024-11-11 |

|

🕹️ ✔️ Llama-3.2-11B-it |

8.4 ± 1.3 |

18.0 ± 5.4 |

15.9 ± 1.2 |

5.8 ± 1.6 |

2.5 ± 2.5 |

0.0 ± 0.0 |

2024-11-11 |

|

🥇 🕹️ ✔️ Gemini-2.5-Pro-Exp-03-25 |

35.7 ± 2.1 |

74.0 ± 6.2 |

37.3 ± 4.6 |

53.3 ± 4.6 |

12.5 ± 5.2 |

1.6 ± 0.4 |

2025-04-25 |

|

🕹️ ✔️ GPT-4o-mini-2024-07-18 |

15.4 ± 1.3 |

38.0 ± 4.3 |

19.9 ± 3.1 |

16.4 ± 2.6 |

2.5 ± 2.5 |

0.0 ± 0.0 |

2024-11-11 |

- % Progress is the model's average completion percentage across the BALROG environments.
- ✔️ Checked indicates that we, the BALROG team, received access to the system and were able to reproduce the reported results.
- 🕹️ Open refers to submissions that have open-source code. This does not necessarily mean the underlying model is open-source.
- The leaderboard is updated once a week, on Mondays.
- If you would like to submit your model to the leaderboard, please check the submission page.
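As a worked check of the % Progress definition, the sketch below averages the per-environment scores of one row. The function name `progress` and the dictionary layout are hypothetical, chosen only for illustration. It also assumes that each ± value is an independent standard error and propagates them as sqrt(Σ σᵢ²) / n; this is an assumption, not the documented BALROG procedure. For the Qwen2.5-72B-it row in the first table it reproduces 16.2 ± 1.6, but because the table entries are already rounded, the propagated error can differ by about 0.1 on some rows.

```python
import math


def progress(scores: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Average completion (%) across environments, plus a propagated error.

    `scores` maps environment name -> (mean completion %, error).
    ASSUMPTION: errors are independent standard errors, so the error of
    the mean of n estimates is sqrt(sum of squared errors) / n.
    """
    n = len(scores)
    mean = sum(m for m, _ in scores.values()) / n
    err = math.sqrt(sum(se ** 2 for _, se in scores.values())) / n
    return mean, err


# Qwen2.5-72B-it row from the first table (six environments, incl. TextWorld).
qwen_72b = {
    "BabyAI": (34.0, 6.7),
    "Crafter": (27.3, 3.6),
    "TextWorld": (11.2, 3.8),
    "BabaIsAI": (19.3, 3.6),
    "MiniHack": (5.0, 3.4),
    "NetHack": (0.3, 0.3),
}

mean, err = progress(qwen_72b)
print(f"{mean:.1f} ± {err:.1f}")  # prints "16.2 ± 1.6"
```

The same function works for the second table, which has no TextWorld column, since it simply averages over whatever environments are passed in.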

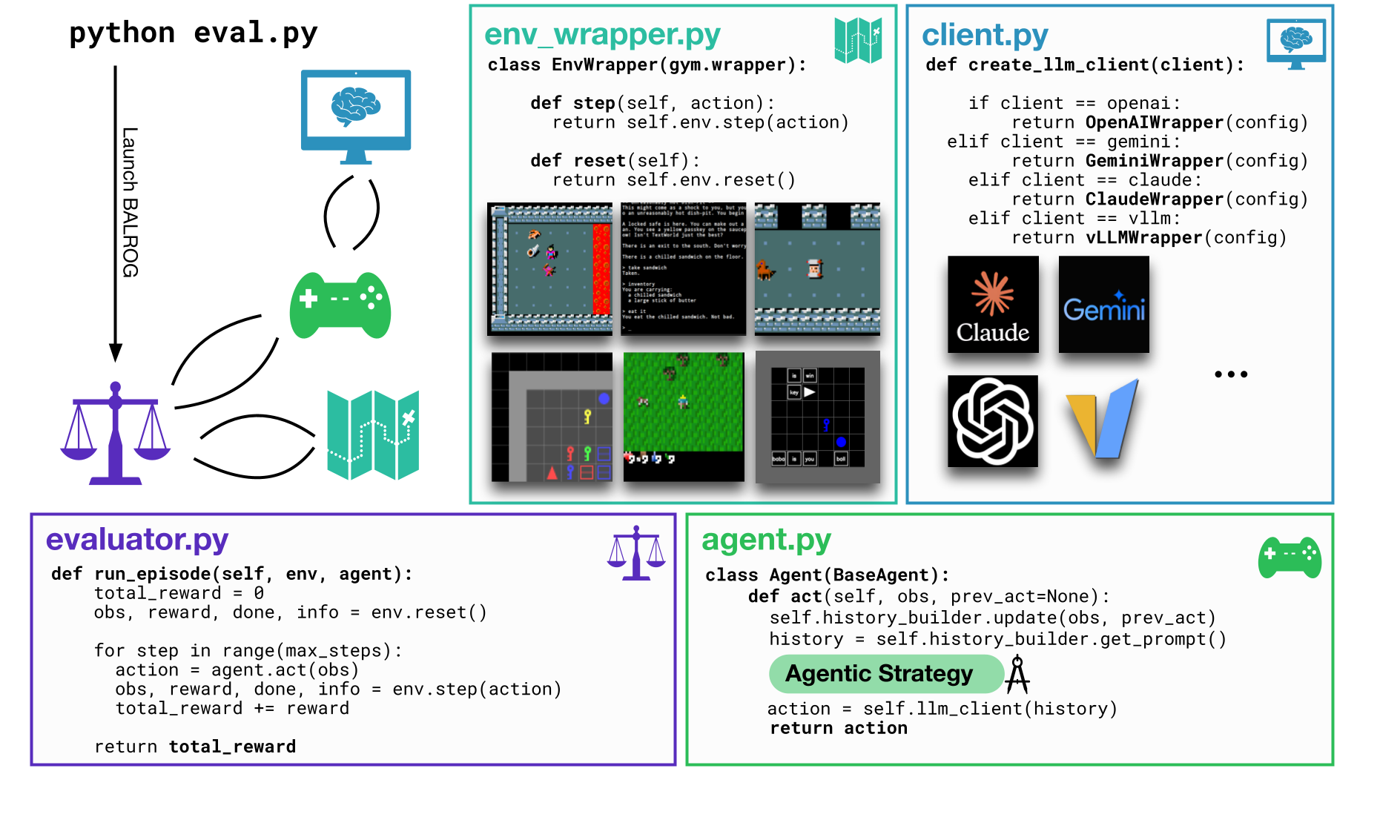

About

BALROG is a benchmark designed to evaluate the agentic capabilities of large language and vision-language models (LLMs and VLMs) on long-horizon tasks, testing their ability to plan, reason spatially, and explore in dynamic environments. Our benchmark reveals that while current models show some success on simpler tasks, they struggle with more complex, procedurally generated environments like NetHack, especially when vision-based decision-making is involved. We provide an open, fine-grained evaluation framework to drive progress in autonomous agent research. Read more about BALROG in our paper!

Citation

@inproceedings{paglieri2025balrog,
  title={{BALROG}: Benchmarking Agentic {LLM} and {VLM} Reasoning On Games},
  author={Davide Paglieri and Bart{\l}omiej Cupia{\l} and Samuel Coward and Ulyana Piterbarg and Maciej Wolczyk and Akbir Khan and Eduardo Pignatelli and {\L}ukasz Kuci{\'n}ski and Lerrel Pinto and Rob Fergus and Jakob Nicolaus Foerster and Jack Parker-Holder and Tim Rockt{\"a}schel},
  booktitle={The Thirteenth International Conference on Learning Representations},
  year={2025},
  url={https://openreview.net/forum?id=fp6t3F669F}
}

Usage: BALROG's website and leaderboard use the template made available by SWE-bench. If you would like to use this template for your own leaderboard, please visit their website and request permission.

Correspondence to: d.paglieri@cs.ucl.ac.uk